I have just posted a blog on LinkedIn about business and IT strategy. I say a bit more here! This was provoked by some research I was doing for a job application which involves IT strategy. I was considering the alignment of business strategy with that of the IT department and what I might say. I outlined three models; although they were all developed a while ago, I think they all have relevance today. The three models address business strategy, software portfolio management and architectural pattern selection. Business strategy should drive portfolio and project management choices. While business strategy will outline how to do what must be done, it also defines what will not be done. Portfolio management determines the allocation of development funding, priority, maintenance funding, project risk appetite, people skills, project governance and software sourcing policy, and as a result of the choices made, one can select the appropriate platform super architectures, of which you may need more than one. I conclude that theory matters. See more below/overleaf …

I have always been influenced by the Value Disciplines framework, which defines the super strategies of companies. This was developed by Treacy and Wiersema, who observed that companies could pursue one of three super strategies: operational excellence, product leadership or customer intimacy. They also observed that successful companies planned to be the best in one of these strategies and just good enough in the other two. The view is that it is not possible to achieve world class excellence in all three strategies and thus strategy at this level demands a choice and then ruthless focus.

A second policy model, aimed more at the software portfolio level, was shown to me by Dan Remenyi; one plots the elements of the software portfolio by the competitive advantage they provide against the degree of dependence on the IT. This modelling then allows the classification of the applications and thus the application of various management policies. Remenyi names the classes as Development, Strategic, Factory and Support. The management policies will include the allocation of development funding, IT development priorities, maintenance funding, project risk appetite, people skills, project governance, software sourcing policy (buy vs. build) and, today, hardware sourcing (buy, rent, cloud vs. on-prem.) policies. What’s interesting about this technique is that it permits the management of software projects to have different governance policies depending on the nature of the business as well as the class of application; a professional services company will be highly reliant on its personnel systems, particularly its training acquisition, time management and skills databases, whereas organisations with more homogeneous staffing requirements will place a different priority on their personnel systems and the required functionality. A key insight is that a company should adopt different project management strategies depending on the classified role of the software system. The fact that different people’s skills suit them to managing different types of project was also a relief, and at the time something new to me, as managements seemed to be looking for a single model of the good project manager, failing to understand that some projects need technologists, some need risk takers and others need a safe pair of hands. Because of the competitive advantage dimension, this model allows the business strategy choices to be reflected in software portfolio management policies.
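The classification step can be sketched in a few lines of Python. This is a minimal illustration, not Remenyi’s own method: the 0 to 1 scoring scale, the 0.5 threshold, the assignment of the four class names to the quadrants and the example applications and scores are all assumptions made for the sake of the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    name: str
    competitive_advantage: float  # assumed scale: 0.0 (none) to 1.0 (high)
    it_dependence: float          # assumed scale: 0.0 (low) to 1.0 (high)


def classify(app: Application, threshold: float = 0.5) -> str:
    """Place an application in one of the four portfolio classes.

    The quadrant-to-name mapping is an assumption, not taken from the source.
    """
    high_advantage = app.competitive_advantage >= threshold
    high_dependence = app.it_dependence >= threshold
    if high_advantage and high_dependence:
        return "Strategic"
    if high_advantage:
        return "Development"
    if high_dependence:
        return "Factory"
    return "Support"


# Hypothetical portfolio for a professional services company.
portfolio = [
    Application("skills database", 0.8, 0.9),
    Application("prototype pricing tool", 0.7, 0.2),
    Application("general ledger", 0.1, 0.9),
    Application("meeting room booking", 0.1, 0.1),
]

for app in portfolio:
    print(f"{app.name}: {classify(app)}")
```

Once each application has a class, the management policies (funding, risk appetite, sourcing, choice of project manager) can be looked up per class rather than set once for the whole portfolio.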

What prompted me to write this, though, was remembering a solutions classification technique used at Sun Microsystems in its dying days. Some of this was influenced by the then Chief Technologist Greg Papadopoulos’s “Red Shift”[1] theories, in which he argued that there were solutions that needed to grow faster than Moore’s Law and some that didn’t. Moore’s Law was important because it defined the workload that one CPU or a single computer system could do. Business IT was generally driven by gross economic growth measured, when lucky, in percentage points; there were other applications that needed to scale with the increasing demand for network bandwidth, which was either geometric or exponential. Sun gathered these into three families of application, arguing that they required specific flavours of super architectures or architectural patterns, each with their own strategic goals.

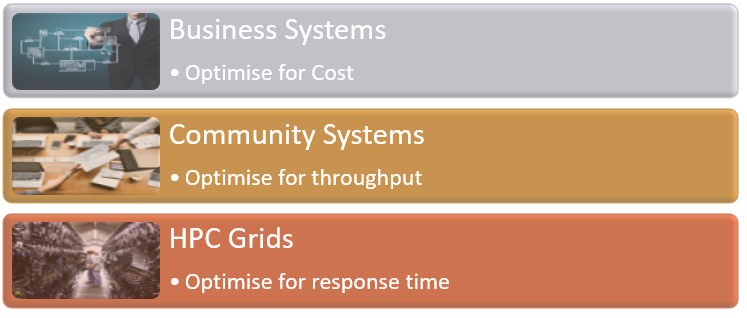
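The Red Shift argument is at heart compound growth arithmetic, which a short Python sketch can make concrete. The two-year doubling period used for Moore’s Law and the growth rates in the example are illustrative assumptions, not figures from the paper.

```python
def capacity_multiple(years: float, doubling_years: float = 2.0) -> float:
    """Growth in what one system can do, assuming capacity doubles
    every `doubling_years` (an assumed reading of Moore's Law)."""
    return 2.0 ** (years / doubling_years)


def demand_multiple(years: float, annual_growth: float) -> float:
    """Growth in workload at a compound annual rate (0.05 means 5% a year)."""
    return (1.0 + annual_growth) ** years


def systems_needed(years: float, annual_growth: float,
                   doubling_years: float = 2.0) -> float:
    """Systems required after `years`, relative to one system today."""
    return (demand_multiple(years, annual_growth)
            / capacity_multiple(years, doubling_years))


# Hypothetical workloads over ten years.
business = systems_needed(10, 0.05)   # grows with the economy
network = systems_needed(10, 1.00)    # doubles every year

print(f"business workload: {business:.2f} of one system")
print(f"network-driven workload: {network:.0f} systems")
```

Under these assumptions the business workload shrinks to about a twentieth of a single system, which is the case for consolidation, while the workload that doubles every year needs 32 systems and has to scale out.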

Today I would describe the systems as business systems, community systems and High Performance Computing. The business systems consist of ERP, Financials, and HR systems, and the goals were, on the whole, to optimise cost and pursue consolidation strategies. Storage was often an important part of these solution designs and the location of the first copy of the data equally important; data was slow and expensive to move[2]. The classification was developed at a time when Cluetrain was popular; community systems required scalable web/app servers and databases[3], in some cases at massive scale. They would typically be extended to customers and/or suppliers. The key goal of the design was to optimise for throughput. The HPC market solutions[4] were at the time architected as if they were CPU constrained, but the bottleneck soon moved, or was already moving, to the network. Sun had identified that CPUs needed to go multi-core and multi-threaded, and its Intel competitors caught up quickly enough, as did the graphics card manufacturers, all this impacting HPC cluster design; it was a window of opportunity[5] which closed quite rapidly. The key goal for designing HPC architectures was response time. There was not much call for HPC in business, as shown by Oracle’s decision to offload all the HPC software products on taking over Sun, although hi-tech manufacturing and biosciences entities still have a need for it.

One thing that interests me is that the challenges of the time led to changes in architecture, design patterns and even chip design. Hardware prices were falling, network capacity was growing and costs were decreasing. The community architectures utilised distributed database solutions to provide sufficient throughput and user concurrency. The distributed database architectures were adopted and enhanced primarily to avoid the large costs of scale-up database platforms. The scale-up solutions were further undermined by the effectiveness of the scale-out solutions, at least in terms of throughput. A second factor was that there were companies whose requirements were beginning to stretch the capability of SQL/RISC platforms; the scale of their problems led them to begin to develop alternative database/data management solutions. These requirements varied, from Google’s search index and Amazon’s product catalogue to, interestingly, Betfair’s biggest markets. While the chip designers, using chip multi-threading, shrank SMP servers onto the chip, the increasing LAN speeds allowed solutions architects to combine multiple so-called commodity systems into effective clusters serving databases or HPC algorithms. The storage systems designers also complicated matters by sedimenting functionality into the storage unit controllers and in several cases adopting Linux or even Solaris as the storage unit O/S. All this allowed the solutions designers to combine CPUs, RAM, system buses and networks as they chose; for a short while, systems design moved to solutions architecture space.

Why bother with this theory and history? I firmly believe that what needs to be done should be guided by a theory of reality. It’s important to know stuff, what works and what doesn’t. Another important planning/management tool for I.T. operations and maintenance activities is the “Plan, Do, Check, Adjust” planning tool. In order to run a complete IT department, having an idea of how to plan it is essential, and the obvious off-the-shelf regime is COBIT. For smaller organisations it might be a bit heavyweight, but as compliance burdens increase and firms are required to prove adequate safeguards, the demand for a testable management process will increase.

Theory matters; it guides great practice. Common sense, prejudice and/or the feel of your gut are not good enough.

ooOOOoo

[1] The Red Shift paper can also be found as a .pdf on my wiki.

[2] Time to review if this is still true; certainly, some cloud solutions’ pricing models make it so.

[3] Distributed partitioned databases and LAN speeds were just reaching the level of maturity and performance that allowed smaller cheaper systems to co-operate in delivering a single database to a huge range of users. (This is not the first time that the cost of VLDB platforms has made distributed computing databases attractive for solutions designers.)

[4] HPC (High Performance Computing) systems were clusters of systems designed to perform compute-intensive, parallelisable algorithms. These were often used in simulations, be they of financial, scientific, physics or engineering problems.

[5] Today’s HPC systems use mixed CPU architectures, x86-64 and GPU, with proprietary networks.

Image Credit: From here, stored and processed for the usual reasons